Shenzhen MindOn Robotics is testing its new hardware and software on the Unitree G1 humanoid robot, with the goal of teaching it household chores such as watering plants, moving packages, and tidying up. This is still a proof of concept rather than a finished product.

Title: MindOn Robotics Takes Giant Leap with Unitree G1: Humanoid Robot Learning Household Chores

Meta Description: Discover how Shenzhen MindOn Robotics is training the Unitree G1 humanoid robot to perform household tasks like watering plants and tidying up—a groundbreaking proof of concept for the future of domestic robotics.

Shenzhen MindOn Robotics Pioneers Domestic Humanoid Robots with Unitree G1 Prototype

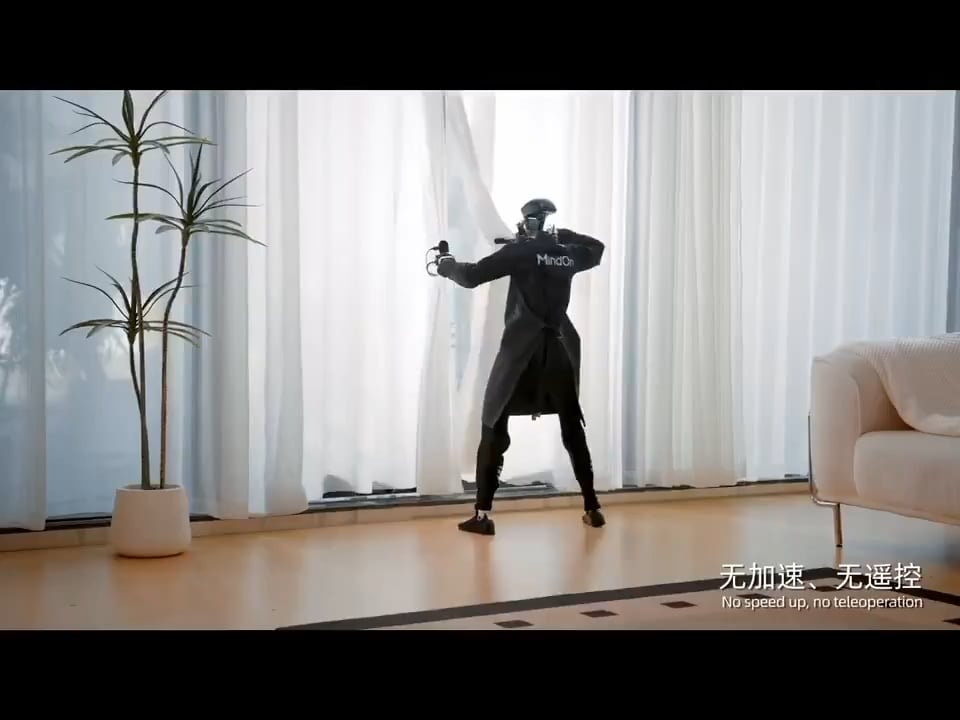

The race to create robots capable of seamlessly integrating into human environments has taken a notable step forward. Shenzhen MindOn Robotics, a rising innovator in intelligent automation, has begun testing its proprietary hardware and software on the Unitree G1 humanoid robot platform, with a mission to teach it human-like household chores. From watering plants to moving packages and organizing living spaces, this proof-of-concept project could redefine how robots assist in everyday life.

Why the Unitree G1? A Perfect Platform for Domestic Experimentation

The Unitree G1, known for its agility, affordability, and modular design, serves as the ideal testbed for MindOn Robotics’ vision. Standing roughly 1.3 m tall, noticeably shorter than an average adult, this bipedal robot features advanced actuators, stereo vision cameras, and tactile sensors, capabilities that enable it to navigate complex indoor environments. MindOn’s engineers leverage these features while integrating custom upgrades, including:

- Enhanced AI-Driven Perception Systems: Improved object recognition for identifying items like potted plants, boxes, or cluttered surfaces.

- Adaptive Manipulation Algorithms: Fine-tuning grip strength and motion to handle fragile objects (e.g., watering cans) or bulky packages without damage.

- Real-Time Spatial Mapping: Using lidar and visual SLAM (Simultaneous Localization and Mapping) to navigate tight spaces like kitchens or living rooms.
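To make the adaptive manipulation idea more concrete, here is a minimal sketch of grip-force adjustment. It is not MindOn's algorithm, which has not been published; the `GripLimits` class, the `adjust_grip` function, the slip signal, and every number below are illustrative assumptions. The sketch raises the grip force while the object slips and clamps it to a per-object range so a fragile item is never squeezed past its limit.

```python
from dataclasses import dataclass


@dataclass
class GripLimits:
    """Hypothetical per-object force limits, in newtons."""
    min_force: float  # lowest force that still holds the object
    max_force: float  # highest force before the object may be damaged


def adjust_grip(force: float, slip: float, limits: GripLimits, gain: float = 2.0) -> float:
    """Return the next grip force given a measured slip signal.

    slip > 0 means the object is sliding, so the grip tightens in
    proportion to the slip. With no slip, the force relaxes slowly.
    """
    if slip > 0.0:
        force += gain * slip   # proportional increase while slipping
    else:
        force -= 0.1           # gentle relaxation when the hold is stable
    # Clamp to the object's safe range.
    return max(limits.min_force, min(limits.max_force, force))


# Made-up limits and slip readings for a watering can.
watering_can = GripLimits(min_force=2.0, max_force=8.0)
force = 2.0
for slip in [0.5, 0.5, 0.2, 0.0, 0.0]:
    force = adjust_grip(force, slip, watering_can)
    print(f"slip={slip:.1f} -> grip force {force:.1f} N")
```

A bulky package would simply get a different `GripLimits` value, which is one way the same control loop could cover both fragile and heavy objects.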

While still in early testing, MindOn’s prototype shows early promise in imitation learning, a technique in which robots learn tasks from human demonstrations. Early videos show the G1 tentatively watering a plant or stacking boxes, with movements that remain deliberate and occasionally unrefined.
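For readers unfamiliar with imitation learning, the sketch below shows about the simplest form it can take: a nearest-neighbour policy that copies the action a human took in the most similar recorded state. The article does not describe MindOn's actual training method, so the `demos` data, the state and action layout, and the `cloned_policy` function are all assumptions made for illustration. Real systems typically train neural networks on camera images and joint readings.

```python
import math

# Hypothetical demonstrations recorded from a human: (state, action) pairs.
# state  = (gripper_x, gripper_y) in metres
# action = (dx, dy), the step the human took from that state
demos = [
    ((0.0, 0.0), (0.1, 0.0)),
    ((0.1, 0.0), (0.1, 0.1)),
    ((0.2, 0.1), (0.0, 0.1)),
]


def cloned_policy(state):
    """Return the action of the closest demonstrated state (1-nearest neighbour)."""
    _, action = min(demos, key=lambda pair: math.dist(pair[0], state))
    return action


# The robot finds itself near the second demonstrated state,
# so it imitates the action the human took there.
print(cloned_policy((0.09, 0.01)))
```

The deliberate, occasionally unrefined motion described above is what one would expect when a policy meets states that differ from anything in its demonstrations.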

From Labs to Living Rooms: How the Robot Learns Chores

MindOn’s approach focuses on breaking down household tasks into modular steps, combining reinforcement learning (trial-and-error rewards) with computer vision. For example:

- Watering Plants:

  - Identify plant location via cameras.

  - Locate and grip a watering can (adjusting pressure to avoid spills).

  - Pour water steadily while avoiding furniture collisions.

- Tidying Up:

  - Classify objects as “toys,” “dishes,” or “clutter.”

  - Place items into designated bins or shelves based on pre-set rules.

- Moving Packages:

  - Calculate weight distribution before lifting.

  - Navigate doorways and stairs using dynamic balance control.
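The tidying step, classifying objects and then placing them by pre-set rules, can be sketched as a simple lookup. This is only an illustration: the `PLACEMENT_RULES` table, the destinations, the confidence threshold, and the `plan_tidy` function are hypothetical, and the detections are hard-coded where a real system would take them from a vision model.

```python
# Hypothetical rule table: object category -> where it belongs.
PLACEMENT_RULES = {
    "toys": "toy bin",
    "dishes": "kitchen shelf",
    "clutter": "sorting tray",
}


def plan_tidy(detections, min_confidence=0.6):
    """Turn (item, category, confidence) detections into placement moves.

    Returns (plan, skipped). Items with an unknown category or a
    low-confidence label are skipped rather than guessed at.
    """
    plan, skipped = [], []
    for item, category, confidence in detections:
        destination = PLACEMENT_RULES.get(category)
        if destination is None or confidence < min_confidence:
            skipped.append(item)
            continue
        plan.append((item, destination))
    return plan, skipped


# Made-up detections standing in for the output of an object classifier.
detections = [
    ("teddy bear", "toys", 0.93),
    ("mug", "dishes", 0.88),
    ("cable", "clutter", 0.41),   # too uncertain, so it is left alone
    ("cat", "pets", 0.99),        # no rule for this category
]
plan, skipped = plan_tidy(detections)
print(plan)
print(skipped)
```

Skipping uncertain items instead of forcing a guess is one conservative way to handle the inconsistent lighting and variable object shapes that make classification unreliable.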

Challenges Remain: Inconsistent lighting, variable object shapes, and unexpected obstacles (e.g., pets) create hurdles. “This isn’t about perfection yet,” says a MindOn spokesperson. “It’s about teaching robots to adapt—like humans do.”

A Proof of Concept with Massive Implications

MindOn stresses that this project is not a finished consumer product but a critical step toward affordable, multi-functional home robots. If successful, the technology could have an impact in several areas:

- Elder Care: Assisting aging populations with daily tasks like fetching items or cleaning.

- Logistics: Enabling robots to sort and move packages in warehouses before entering homes.

- Cost Reduction: Leveraging the Unitree G1’s open-source-friendly design to keep R&D expenses low, with the aim of eventual consumer accessibility.

Industry analysts note that while giants like Boston Dynamics (Atlas) and Tesla (Optimus) dominate headlines, startups like MindOn are pushing practical, small-scale applications. “Household chores are an untapped frontier,” says robotics expert Dr. Lisa Yang. “Simpler tasks today could lead to fully autonomous companions tomorrow.”

What’s Next for MindOn Robotics and the G1?

MindOn plans to expand testing to more complex chores (e.g., folding laundry, basic meal prep) in 2024. Key milestones include:

- Improving Failure Recovery: Teaching the robot to correct mistakes (e.g., spilling water) autonomously.

- Multi-Task Workflows: Chaining actions (e.g., “tidy room → water plants → take out trash”) without reprogramming.

- Public Demos: Showcasing progress at tech expos to attract partnerships.
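The first two milestones, failure recovery and multi-task workflows, can be pictured together as a task runner that chains actions and retries a step when it fails. The sketch below is a toy model under stated assumptions: `run_workflow`, the task names, and the retry limit are hypothetical, and each action is a plain function returning `True` on success where a real robot would execute a learned skill.

```python
from typing import Callable, List, Tuple

Task = Tuple[str, Callable[[], bool]]  # (name, action returning True on success)


def run_workflow(tasks: List[Task], max_attempts: int = 2) -> List[str]:
    """Run tasks in order, retrying each failed task up to max_attempts times.

    Returns a log of outcomes. The workflow stops at the first task that
    keeps failing, so that a human can step in.
    """
    log = []
    for name, action in tasks:
        for attempt in range(1, max_attempts + 1):
            if action():
                log.append(f"{name}: ok (attempt {attempt})")
                break
            log.append(f"{name}: failed (attempt {attempt})")
        else:
            # Every attempt failed: hand control back to a person.
            log.append(f"{name}: giving up, requesting human help")
            break
    return log


# A simulated watering task that "spills" on its first try.
tries = {"count": 0}


def water_plants() -> bool:
    tries["count"] += 1
    return tries["count"] > 1


for line in run_workflow([
    ("tidy room", lambda: True),
    ("water plants", water_plants),
    ("take out trash", lambda: True),
]):
    print(line)
```

Stopping and asking for help after repeated failures, rather than retrying forever, fits the emphasis on human oversight.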

The team also emphasizes ethical AI development, ensuring robots prioritize safety and human oversight—a crucial consideration as machines enter intimate spaces like homes.

Conclusion: The Future of Home Robotics Starts Now

Shenzhen MindOn Robotics’ work on the Unitree G1 offers a tantalizing glimpse into a future where humanoid robots handle mundane chores, freeing humans for creativity and connection. While years of refinement lie ahead, this proof of concept suggests that general-purpose domestic robots aren’t just sci-fi. They’re an approaching reality.

As MindOn iterates on its hardware-software synergy, the dream of a robot that waters your plants while you sip coffee inches closer to the mainstream. Stay tuned for updates: the next era of home automation is taking its first steps.
